From October 1st through 3rd I participated in ONTOBRAS 2018 (Seminar on Ontology Research in Brazil) in São Paulo, Brazil. As usual, in order to force myself to pay attention and to share the knowledge with others, I wrote quick summaries of the presentations that were given during the event.

ONTOBRAS 2018 papers are available as CEUR-WS proceedings.

Day 1: Monday, October 1st

My participation in the conference started with a mixup: I thought the first session would not take place in the morning, so I purchased a flight that got to São Paulo at 10:30, which unfortunately made me miss the first full papers session.

Session 2: Full Papers

The first paper in this session was from NEMO. Cássio Reginato presented the paper “Ontology Integration Approaches: A Systematic Mapping”, which reports on a systematic mapping of the literature on ontology integration approaches that work at the conceptual level. Starting from 591 non-duplicate entries, the participants in the mapping filtered them down to 15 publications that satisfied the inclusion criteria. Studying these publications, they answered 13 previously established research questions. Truth be told, I was reviewing the slides I was about to present later in the session, so I didn’t take a lot of notes during his talk. After his presentation, one of the questions from the audience mentioned an interesting concept, called MIREOT: the Minimum Information to Reference an External Ontology Term. Maybe it’s worth checking out.

Next, Pedro Henrique Piccoli Richetti (UNIRIO) presented “A Measurement Ontology for Beliefs, Desires, Intentions and Feelings with Knowledge-intensive Processes”. The paper investigates the problem of analyzing Knowledge-intensive Processes (KiPs) using quantifiable measurements, specifically providing formal definitions to measure Beliefs, Desires, Intentions and Feelings (BDIFs). Reusing M-OPL (Measurement Ontology Pattern Language) and KIPO (KiP Ontology), the authors related Speech Acts theory with BDIFs to arrive at an ontology for BDIF measurement. The ontology was evaluated using 20,500+ messages exchanged in the context of an ICT services company.

Session 3: Short Paper Pitches

In this session, the authors of short papers, who would present their work during the poster session on Wednesday, had 5 minutes (actually increased to 10 because many authors couldn’t make it to the conference) to pitch their work and draw attention to their posters.

Marcos Fragomeni Padron (UNB) presented “Towards a conceptual model for Brazilian popular music representation”. The ontology describes the domain of Brazilian popular music in a broad context (nature, cultural context, conception, recording, performing), based on previous work on this theme that included a literature review and interviews with 22 musicians. To build the ontology, they reused other conceptual models (IFLA LRM, CIDOC CRM, FRBRoo) and other initiatives such as DOREMUS and The Music Ontology. The model was represented in OntoUML 2.0.

Cássio Reginato presented “Building an Ontology-Based Infrastructure to Support Environmental Quality Research: First Steps”, which is in the context of a project that aims to provide ontologies to formally define the vocabulary related to measurements of water quality of Rio Doce after the disaster in Mariana, MG. Given the amount of different ways of representing water quality information, they propose a bottom-up approach that starts from the data to be represented and helps modelers find existing ontologies that satisfy the requirements of the data and could be reused.

Julio Cesar dos Reis (UNICAMP) presented “Mapping Refinement based on Ontology Evolution: A Context-Matching Approach”. The work proposes a method for refining existing mappings between ontologies, which need to be updated when such ontologies go through maintenance and evolution. They propose an algorithm that identifies the context of each concept and mapping in a way that satisfies the initial goals.

Next, the paper “Ferramenta para anotação semântica de processos de negócio de uma redação jornalística” (“A semantic annotation tool for business processes in a newsroom”), written by Marcelo Da Fonseca, Edison Ishikawa, Benedito Medeiros Neto, Márcio Victorino and Edgard Oliveira from UNB was presented, but I couldn’t really understand what the project was about during the pitch. I took a photo of the poster later.

Ana Carolina Almeida (UERJ) presented “CM-OPL: an Ontology Pattern Language for the Configuration Management Task”. The work presents an Ontology Pattern Language (OPL) for Configuration Management based on CMTO (Calhau & Falbo) and UFO, with the purpose of facilitating ontology reuse in the domain of configuration management over various application domains (the work was illustrated with an example from car configuration management).

Reinaldo Almeida (UFBA) presented “Alinhamento entre a Semiótica de Peirce e de Deleuze-Guattari na Geração de Significados” (“Alignment between the Semiotics of Peirce and of Deleuze-Guattari in the Generation of Meanings”). The research focuses on the perception and conceptualization of ontologies, using concepts from Peirce’s Semiotics combined with Deleuze-Guattari’s Transsemiotics. In other words, I couldn’t understand a thing. 🙂

ONTOBRAS Keynote at CLEI/LACLO

One of the keynotes from the main event was given by Diego Calvanese, from the Free University of Bozen-Bolzano, Italy, who was an invited speaker of ONTOBRAS. Diego presented a talk called “Ontology-based Data Access and Integration: Relational Data and Beyond”.

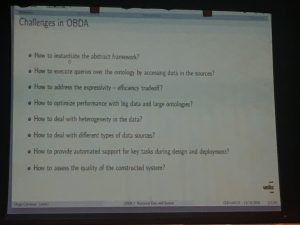

Diego started by mentioning the challenges of Big Data: the four V’s (Volume, Variety, Velocity, Veracity), with Variety being considered a more important aspect than Volume. He illustrated this with the example of Statoil: 50 million Euros to have experts in Geology and IT construct queries over a database to retrieve the information they need. He then proposed, as a solution, to explore Semantics, using Ontology-based Data Access (OBDA), whose challenges are shown in the slide in the figure below.

The keynote focused on challenges 1, 2, 3 and 6 from the slides. Diego presented the question: which languages to use (expressivity vs. efficiency)? His answer: ontology in OWL 2 QL, queries in SPARQL (a well-behaved subset of SPARQL actually) and mapping (from database to OWL) in R2RML. He went on to explain each of these choices.

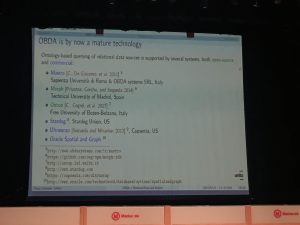

OBDA uses these technologies to translate queries posed over the ontology into SQL queries over the relational database, and then to convert the relational results back into ontology instances. There are many implementations (as you can see in the photo below). He then talked a bit about ONTOP, which is developed at UniBZ.

After an overview of what ONTOP already does in terms of OBDA (a more complete view was presented in his tutorial on the next day), Diego mentioned some directions in which they are extending OBDA: graphs, trees/NoSQL, CSV files, textual data (possibly annotated), temporal/geospatial/streaming data, multiple heterogeneous data sources, richer querying (aggregation, analytics), more expressive power with reasoning, improved performance, friendlier user interfaces, and data management (e.g., updates) mediated by the ontology. Some of these are works in progress, some are future work.

He then talked a bit about one of the works in progress: representing temporal data in OBDA. The proposed approach considers static predicates A(d) and temporal predicates A(d)@[t1,t2], t1 and t2 being begin-end timestamps. Implementation is augmented with nonrecursive Datalog static rules and metric temporal rules with the nonrecursive fragment of DatalogMTL (for efficiency reasons). For the mapping, the database should have the temporal information as timestamps and use temporal mapping assertions to specify them. This is currently being implemented in ONTOP.
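To make the notation A(d)@[t1,t2] a bit more concrete, here is a minimal Python sketch of my own (not ONTOP code). It assumes a hypothetical table of rows with begin and end timestamps, and a hypothetical function `temporal_mapping` that plays the role of a temporal mapping assertion by turning matching rows into temporal facts.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TemporalFact:
    """A fact of the form predicate(subject)@[begin, end]."""
    predicate: str
    subject: str
    begin: datetime
    end: datetime

    def holds_at(self, t):
        # The interval is treated as closed on both ends, as in [t1,t2].
        return self.begin <= t <= self.end


# Hypothetical database rows: (id, state, begin timestamp, end timestamp).
ROWS = [
    ("t1", "overheating", "2018-10-01T10:00:00", "2018-10-01T10:30:00"),
    ("t2", "idle", "2018-10-01T09:00:00", "2018-10-01T12:00:00"),
]


def temporal_mapping(rows, state, predicate):
    """Map every row in the given state to a temporal fact for `predicate`."""
    return [
        TemporalFact(predicate, row_id,
                     datetime.fromisoformat(begin),
                     datetime.fromisoformat(end))
        for row_id, row_state, begin, end in rows
        if row_state == state
    ]


facts = temporal_mapping(ROWS, "overheating", "Overheating")
```

With these rows, `facts` holds a single fact, Overheating(t1), valid between 10:00 and 10:30. The Datalog rules mentioned in the talk are not covered by this sketch.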

Another work in progress is related to multiple heterogeneous data sources, but he didn’t have the time to address this during the keynote.

One other interesting thing he mentioned was a graphical user interface for SPARQL to make query building easier for domain experts. He mentioned this was a result from the Optique Project. Something to check out…

Day 2: Tuesday, October 2nd

The second day started with “Ontology-based Data Access: A Tutorial”, given by Diego Calvanese. Diego started the tutorial by explaining in detail how ONTOP, the OBDA solution presented on the previous day during his keynote, performs query rewriting. Basically, there are some inclusion assertions (rules in the ontology) that rewrite DL-Lite queries when they are applicable to parts of the query. The algorithm checks the applicability, applies the rewriting rule and adds the new query to a set of queries that will form a disjunction, until it is saturated. The algorithm is very efficient with respect to the size of the TBox and the ABox, but exponential in the size of the query, which is comparable to the efficiency of relational database queries. But this is only possible if we are constrained to DL-Lite (e.g., you cannot use UNION), such as when we use the OWL 2 QL profile.
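The saturation loop described above can be sketched in a few lines of Python. This is a heavily simplified illustration of my own, not the algorithm from the tutorial: it assumes a toy TBox with inclusions between atomic concepts only (no roles, no existentials, no unification of atoms), and all names (`INCLUSIONS`, `rewrite`, the concepts) are hypothetical.

```python
# Hypothetical toy TBox: each pair (sub, sup) stands for the inclusion
# assertion "sub is subsumed by sup" between atomic concepts.
INCLUSIONS = [
    ("Professor", "Teacher"),
    ("Lecturer", "Teacher"),
    ("FullProfessor", "Professor"),
]


def rewrite(query, inclusions):
    """Saturate a conjunctive query into a set of queries (a disjunction).

    A query is a frozenset of atoms; an atom is a (concept, variable) pair.
    """
    result = {query}
    frontier = [query]
    while frontier:
        current = frontier.pop()
        for atom in current:
            concept, var = atom
            for sub, sup in inclusions:
                if sup == concept:  # the inclusion is applicable to this atom
                    new_query = (current - {atom}) | {(sub, var)}
                    if new_query not in result:
                        result.add(new_query)
                        frontier.append(new_query)
    return result  # no rule produces a new query anymore: saturated


query = frozenset({("Teacher", "x"), ("Person", "x")})
union_of_queries = rewrite(query, INCLUSIONS)
```

For this toy input, the result contains four queries: the original one plus the variants in which Teacher(x) is replaced by Professor(x), Lecturer(x) and FullProfessor(x). Evaluating their union over the data gives the same answers as evaluating the original query while taking the inclusions into account.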

He moved on to talk about querying an OBDA system. The idea is to apply to the mappings a process similar to the one used for queries, rewriting the mappings from the ontology to the database in order to obtain a saturated mapping, which is then optimized based on database constraints (especially foreign key constraints). ONTOP works by first rewriting the SPARQL query into a saturated SPARQL query, then unfolding it into an SQL query by using the saturated mapping (which can be produced once and reused in all queries). Diego then explained how the derived SQL query can be optimized in many different ways.
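As a rough illustration of the saturated mapping and the unfolding step, here is a small Python sketch of my own. It assumes a hypothetical mapping from ontology classes to SQL queries and only atomic concept inclusions. It does not use R2RML or ONTOP's actual data structures, and it skips the constraint-based optimization entirely.

```python
# Hypothetical mapping: each ontology class is populated by SQL queries.
MAPPING = {
    "Professor": ["SELECT id FROM professors"],
    "Lecturer": ["SELECT id FROM lecturers"],
}

# Hypothetical inclusion assertions (sub, sup) from the ontology.
INCLUSIONS = [("Professor", "Teacher"), ("Lecturer", "Teacher")]


def saturate_mapping(mapping, inclusions):
    """Propagate SQL sources from subclasses to superclasses until stable."""
    saturated = {cls: list(sqls) for cls, sqls in mapping.items()}
    changed = True
    while changed:
        changed = False
        for sub, sup in inclusions:
            for sql in list(saturated.get(sub, [])):
                target = saturated.setdefault(sup, [])
                if sql not in target:
                    target.append(sql)
                    changed = True
    return saturated


def unfold(concept, saturated):
    """Unfold a single-concept query into SQL using the saturated mapping."""
    return "\nUNION\n".join(saturated.get(concept, []))


SATURATED = saturate_mapping(MAPPING, INCLUSIONS)  # computed once, reused
teacher_sql = unfold("Teacher", SATURATED)
```

Here Teacher has no mapping of its own, but after saturation it is populated by the union of the two subclass queries, so `teacher_sql` is a UNION of the SELECT statements over `professors` and `lecturers`.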

Sessions 4 (Doctoral/Masters Workshop) and 5 (Full Papers)

After Diego’s tutorial, there was a session from the Doctoral Theses and Masters Dissertations Workshop before lunch and a full papers session after lunch, which I unfortunately had to miss because of a project meeting.

I arrived at the end of the full papers session, just to see Lucas Santos, from NEMO, present our work: “Using an Ontology Network for Data Integration: A Case in the Public Security Domain”, which is the result of a project that I happen to coordinate at NEMO. This work proposes a core ontology on violent crime processes, representing the concepts that exist in legal processes for this type of crime, from the beginning of the investigation by the police until the eventual incarceration of the alleged criminal. Mappings between this ontology and conceptual models of information systems that support this process were made, all the models were implemented in OWL, and ONTOP was used to allow us to perform SPARQL queries with strategic public security questions that required the data from these information systems to be integrated in order to be answered. Lucas did a very good job presenting the paper and there was a lot of interest from the audience during the questions period afterwards.

ONTOBRAS Internal Keynote #1

The program continued with a keynote from João Paulo Andrade Almeida, from NEMO, entitled “Ontology-based Multi-level Conceptual Model”. João Paulo started by motivating multi-level modeling, which is needed when we want to talk not only about classes/types and their instances, but also about types of types and so on (e.g., Cecil is an instance of Lion/Panthera leo, which is an instance of Species, which is an instance of Taxonomic Rank) and capture properties of these high-level classes (e.g., instances of Species should be disjoint). He then presented how people usually model these multi-level models with two levels (e.g., using Powertypes) and the problems with using this kind of workaround. Proper multi-level modeling is required to avoid the problems that come with what he termed level blindness. He illustrated this with an analysis done with data from Wikidata that showed an alarming number of subclass and instance-of relations that fall into very simple anti-patterns that lead to wrong inferences (e.g., that Tim Berners-Lee is an instance of a Profession).

This motivated the PhD work from Victorio Carvalho, which is a micro-theory on this aspect of modeling that proposes a language revision for multi-level modeling and provides sanity checks. This theory can be summarized in: (1) individuals are those entities which cannot have instances; (2) first-order types are those whose instances are individuals; (3) second-order types are those whose instances are first-order types; and so on. Plus, the instance-of and specialization relations are used as usual and the theory defines that two types are the same if they have the same set of instances in all possible worlds (extensional equivalence). From these basic definitions, we infer that entities are stratified into orders/levels, instance-of cannot skip orders and specialization cannot cross order boundaries. This alone defines a few anti-patterns that help sanitize multi-level models (as done with Wikidata).
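The two inferred rules (instance-of cannot skip orders, specialization cannot cross order boundaries) lend themselves to a simple sanity check. The Python sketch below is my own illustration, not code from the MLT work: it assumes that the order of every entity is declared up front in a hypothetical `ORDER` dictionary, whereas the theory itself derives the stratification from its axioms.

```python
# Hypothetical declared orders: 0 = individual, 1 = first-order type, etc.
ORDER = {"Cecil": 0, "Lion": 1, "Animal": 1, "Species": 2, "TaxonomicRank": 3}


def check_model(order, instance_of, specializes):
    """Return a list of violations of the two order rules."""
    problems = []
    for entity, type_ in instance_of:
        # instance-of must go from order n to order n + 1, exactly.
        if order[type_] != order[entity] + 1:
            problems.append(f"instance-of skips orders: {entity} -> {type_}")
    for sub, sup in specializes:
        # specialization must relate two types of the same order.
        if order[sub] != order[sup]:
            problems.append(f"specialization crosses orders: {sub} -> {sup}")
    return problems


good = check_model(
    ORDER,
    instance_of=[("Cecil", "Lion"), ("Lion", "Species"), ("Species", "TaxonomicRank")],
    specializes=[("Lion", "Animal")],
)
bad = check_model(
    ORDER,
    instance_of=[("Cecil", "Species")],
    specializes=[("Lion", "Species")],
)
```

The first model passes with no problems, while the second one is flagged twice: Cecil (an individual) cannot be a direct instance of Species (a second-order type), and Lion cannot specialize Species.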

João Paulo further presented multi-level definitions such as partitioning (a higher-level type whose instances form a generalization set under the type it partitions that is disjoint and complete) and categorization (similar, but not necessarily disjoint and complete). He went on to explain how these and other multi-level concepts could be represented in UML using a profile. He concluded with current work in this research — fully integrate MLT as a microtheory of UFO and incorporate its UML profile into OntoUML — and with things that have been defined in MLT but he was not able to include in the talk. Interested readers can refer to the publications in the photo below.

Session 6: Doctoral/Masters Workshop

The last session of the day had four more presentations from PhD and Masters students in the Doctoral Theses and Masters Dissertations Workshop.

First, Bobiquins Estêvão de Mello (PhD candidate from UFSC) presented “Ontologia do Monoteísmo – OntoM.org” (“OntoM.org – An Ontology of Monotheism”). Estêvão started by justifying the purpose of an ontology for Monotheism, which could help different Monotheism doctrines to converse among themselves (and maybe avoid so many struggles, like in the past). The research question, then, is: “How to represent knowledge from this domain using foundational ontologies and philosophical and phenomenological theories without a religious bias?” The method starts from a literary corpus composed of institutional texts from four major monotheist religions, and is then divided into four stages: perception (based on the work of Edgar Morin), conceptualization (based on the work of Martin Heidegger), use of technology (based on OntoUML/UFO) and verification (based on the work of the Center for Religious Studies of Bruno Kessler Foundation, in Italy). The expected results are to produce a knowledge base without religious bias (being based on the phenomenological theories of Heidegger), broaden the understanding of Monotheism in general, and serve as a basis for new ontologies, apps, etc.

Débora Lina Nascimento Ciriaco Pereira (Masters candidate from IME-USP) presented “Semantic Integration of Health Databases in São Paulo: A Case Study With Congenital Anomalies”. The motivation of the work is that the health domain has large data repositories, glossaries and terminological standards, as well as independent, redundant, fragmented and non-interoperable information systems. In particular, SUS (the National Health System) in Brazil has 140+ health information systems, lacking a common identifier, each focusing on a different aspect of the data, mostly focused on their particular events and not on the patient. The work is done in the context of a partnership with the City of São Paulo, with a particular focus on congenital anomalies (structural or functional anomalies that occur during intrauterine life), which are handled in 8 different information systems. Still, some questions cannot be answered automatically for lack of interoperation between these systems. The work, thus, consists of the integration of 3 of these information systems using both ONTOP and Linked Data Mashup, comparing the solutions and providing the integrated data in RDF format in order to be able to answer questions that the domain experts are interested in.

Mônica Cristina Barazzetti (Masters student from UTFPR) presented “Uma proposta de Visualizador de Ontologias para usuários Especialistas de domínio” (“A proposal for an Ontology Visualizer for Domain Expert Users”). The work is motivated by the difficulty for domain experts to visualize ontologies without the knowledge of technical (Computer Science-related) concepts from the Ontology field. A Systematic Review of ontology visualization tools revealed several limitations, such as using only tree-based layouts, no broad support for filtering, not available on the Web, etc. The work proposes a new tool for ontology visualization that works on these limitations.

Luan Fonseca Garcia (PhD candidate from UFRGS) presented “Uma Arquitetura Baseada em Ontologias para Composição Semântica de Workflows” (“An Architecture Based on Ontologies for the Semantic Composition of Workflows”). The work is in the context of Oil Exploration, in particular focusing on models that represent oil reservoirs. A lot of data is generated in the processes related to this specific activity, using different support software with proprietary formats, and the work of the domain expert is usually conducted in an ad-hoc way. The goal of his work, then, is to provide a framework capable of semantically supporting the workflow of domain experts on oil reservoirs. The proposed solution consists of an ontology network founded on BFO and composed of core ontologies on BPM and Geology, combined with ONTOP and relational databases in the technological layer.

Day 3: Wednesday, October 3rd

On the last day of the event, I managed to attend 2 papers sessions, a keynote from CLEI-LACLO and the two internal keynotes of ONTOBRAS itself.

Session 7: Full Papers

Fernanda Baião (PUC-RIO) presented “Applying Multi-Level Typing Theory in Knowledge-intensive Process Modeling”. In the context of Knowledge-intensive Processes (KiPs), Fernanda’s group has been working on KiPO, an ontology for KiPs. However, instantiating it into KiP models is not trivial and one of the aspects of this difficulty is the distinction between instances (instantiating the concepts of KiPO) and types (subclassing the concepts of KiPO). Analyzing the different proposals for multi-level modeling, they chose to use UFO-MLT (presented in João Paulo’s keynote on the previous day) and proposed KiPO-ML (Knowledge-intensive Process Ontology – MultiLevel), extending KiPO with multiple levels of instantiation and systematizing the steps to apply MLT on KiPO with a focus on the KiPCO (KiP Core Ontology) sub-ontology. The proposal includes a naming pattern to help modelers choose appropriate names for multi-level concepts and a tool to analyze and validate KiP models according to KiPO-ML using Alloy Analyzer.

Elisângela Aganette (UFMG) presented “The Theory of Faceted Classification Applied to the Construction of Faceted Taxonomies”. Faceted Taxonomies offer a more structured model to facilitate reuse, in which concepts are not restricted to a single dimension. Their structure is divided into a set of taxonomies, each describing a different perspective. The work proposes to apply the Theory of Faceted Classification, proposed by Ranganathan in 1967, to the construction of faceted taxonomies in order to foster their reuse in the construction of thesauri and ontologies.

Gabriel Machado Lunardi (UFRGS) presented “Um Modelo Ontológico Probabilístico Para Assistir Pessoas com Declínio Cognitivo” (“An Ontological Probabilistic Model to Assist People with Cognitive Decline”). The work is in the context of Ambient Assisted Living (AAL), specifically in the context of the Human Behavior Monitoring and Support (HBMS) project from the Alpen-Adria University, in Austria, which uses OWL-DL to implement its models. However, this model doesn’t allow reasoning with uncertainty. Gabriel’s work fills this gap with an ontology and a probabilistic framework for reasoning (based on PR-OWL). The work was evaluated via a case study using data obtained by simulating a patient from an AAL home with HBMS support.

Cauã Antunes (UFRGS) presented “Ontologies in Category Theory: a search for meaningful morphisms”. In the context of the Semantic Web, how can we relate and integrate distinct ontologies? Existing works on ontology integration use Category Theory, which is a mathematical theory that focuses on relations, to foster the integration. Cauã proposes 5 criteria that help construct semantically sound ontology morphisms (relations, in Category Theory lingo, if I understood correctly) and claims that existing work properly satisfies only the first one. The criteria are a benchmark that can guide future work in this area.

CLEI/LACLO Keynote

Wayne Hodgins (Autodesk Fellow, currently retired and one of the founders of the LACLO conference) presented the keynote “Seeing the Future from the Past; Expect less, Hope more; Think different and exponentially bigger”. Wayne started by talking about how we shifted from mass production to personalization, but we still do a lot of things based on sameness, which led to his idea of The Snowflake Effect.

He then reviewed how his life, the tech industry and the world in general changed over the last 12 years. Here are some things that did not exist in 2006 (year of the first LACLO conference): the iPhone, fiber optics, graphene, cellphone technology (2G-5G), tablets, nano robots, satellite communication, solar cells, IoT, 3D printing & scanning, touch screens, social media, etc. He made the case that the rate of change is exponential. Living in a World of Exponential Change means: learn from the past, live in the present, imagine the future.

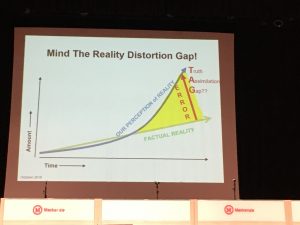

He moved on to propose that, just as there is a Technology Assimilation Gap (how long we take to assimilate new technology), which is getting messy since the rate of change is exponential, there is also a Truth Assimilation Gap, which you can see in the photo below. He went on to argue that a lot of bad things have decreased a lot (exponentially) and a lot of good things have increased a lot (exponentially); however, our perception is that things are getting worse, because of the amount of information we are exposed to and because of the assimilation gap. To prove his point, he gave the audience a quick quiz and mentioned that very few people get more than 50% of the answers correct (I got 4 out of 13).

In conclusion, he asked: “so what?” His answers to this question are: plan for you and your family and friends to live much longer; save and invest more for an indefinite life and health span; stop thinking of yourself as a worker, find what you love to do (you will live longer after retirement); cultivate readiness for the unexpected, new perspectives, no expectations, unbounded hopes; figure out what you really need in life and how to ask for it; take care of yourself first (airplane mask analogy); focus on innovation, not replication (“Flying is not about flapping faster, it’s about thinking differently”).

Session 8: Full Papers

Edelweis Rohrer (Universidad de la República, Uruguay) presented “Applying meta-modelling to an accounting application”. The work proposes two ontology models for the accounting domain: an ontological model (OM) and an ontological meta-model (OMM), which were applied on a case study in the accounting domain (a software called SIGGA). Functional and non-functional requirements for the models were established and then they were compared with respect to such requirements. OMM satisfied the requirements more completely, showing the need for meta-modeling in this application domain.

Felipe Pierin (IME-USP) presented “IntegraWeb: Uma proposta de arquitetura baseada em mapeamentos semânticos” (“IntegraWeb: An architecture based on semantic mappings”). Nowadays many services work with storing and correlating data. However, sometimes complementary information may be stored in different silos, sometimes fragmented, sometimes duplicated. The proposal, IntegraWeb, is an architecture that is similar to OBDA solutions such as ONTOP, but using the Web as the database. The architecture is divided into three layers: information retrieval, storage and presentation. Retrieval is done through the definition of rules to interpret the HTML content of the websites and the choice of an ontology to store the extracted information (e.g., Schema.org). Storage is done by checking for duplicate and more up-to-date information and also establishing rules to infer characteristics (e.g., if something is near or far). Presentation is done by querying the stored data using SPARQL and presenting it in a user-friendly Web view. Experiments were conducted to evaluate the integration of data from different sources, conflict resolution when facing duplicate information and combined queries. Felipe concluded with the limitations of the architecture, related to extracting data from HTML and to data traffic on the Web.
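To give an idea of what the storage layer does, here is a tiny Python sketch of my own, with entirely hypothetical names and data (this is not IntegraWeb code). It assumes that records extracted from different websites carry a last-update date and a distance, keeps only the most up-to-date record for each item, and applies one inference rule that labels items as near or far.

```python
from dataclasses import dataclass


@dataclass
class Record:
    source: str         # website the record was extracted from
    name: str           # key used to detect duplicates
    distance_km: float  # raw value used by the inference rule
    updated: str        # ISO date; plain string comparison works here


def store(records, near_threshold_km=2.0):
    """Resolve duplicates (newest wins) and infer a near/far label."""
    latest = {}
    for rec in records:
        kept = latest.get(rec.name)
        if kept is None or rec.updated > kept.updated:
            latest[rec.name] = rec
    return {
        name: {
            "source": rec.source,
            "proximity": "near" if rec.distance_km <= near_threshold_km else "far",
        }
        for name, rec in latest.items()
    }


RECORDS = [
    Record("site-a", "Cafe Central", 1.5, "2018-09-01"),
    Record("site-b", "Cafe Central", 1.4, "2018-09-20"),  # newer duplicate
    Record("site-a", "Museum", 7.0, "2018-08-15"),
]
stored = store(RECORDS)
```

In this example the entry for "Cafe Central" comes from site-b, since it is the more recent of the two duplicates, and it is labeled near, while "Museum" is labeled far.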

ONTOBRAS Internal Keynote #2

Alexandre Rademaker (IBM) presented the keynote “Challenges in Natural Language Processing Understanding in O&G Domain”. Alexandre started by enumerating some of the challenges, such as: user expectations, data curation, establishing the goals for NLP/NLU, etc. There are statistical methods and symbolic methods, but both have their problems (some works combine both approaches). Deep learning has been gaining a lot of momentum, but expectations might be too high: this is just another machine learning method.

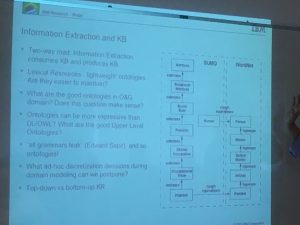

What are the alternatives for information extraction? One is annotation, training and evaluation: it takes a lot of work involving many domain experts and has difficulty dealing with adjectives that change the meaning of things, but this is the most successfully used alternative nowadays. Another is linguistically motivated: the syntactic trees for annotation are mined from the analysis of the texts and the knowledge base is built incrementally, in a multidisciplinary approach between Computer Science, Math, Linguistics and the particular domain. Alexandre has been working on this latter approach and presented some of the things he has been using. There was a lot of technical NLP stuff that I didn’t manage to include in my notes because I lack the foundations in this area of research, unfortunately.

In particular, he addressed the problem of ambiguity, which in terms of the technology he had just presented (parsers that extract abstract syntax trees from natural language text) means having multiple trees produced from the same text. Using the wrong interpretation can have a huge impact going forward, so what is usually done is to go forward with multiple trees, using some techniques, such as pruning, to lower the complexity. I took a photo of one of the slides that summarized the role of ontologies in his work.

In summary, they are using project-specific OWL ontologies, conducting deep parsing with grammars and performing word-sense disambiguation via a mapping from concepts to predicates; the resulting semantic representations can be used in ML approaches for classification and/or knowledge representation. Please don’t ask me for any further info. 🙂

ONTOBRAS Invited Talk from ABIN

The last session I was able to attend (given my flight schedule and São Paulo’s infernal traffic) was an invited talk from a member of ABIN (Brazilian Intelligence Agency). Rafael mentioned a Presidential Decree from December 2017 that institutes the National Strategy for Intelligence in Brazil and mentions both big data and analytics.

He then went on to mention that ABIN extracts data from multiple sources: public documents (such as news), documents from partners in the intelligence system (e.g., the police), intelligence reports, structured databases from partners, etc. Different government agencies form the Brazilian Intelligence System (SISBIN) and ABIN is in the center of this, handling a lot of data and trying to extract information from it.

The challenges here are: lack of standardization in reports, data sources with varied formats, non-consensual concepts and ways of expressing them, data compartmentalization, analysts who are unwilling to share data in the system, etc. To identify concepts in non-structured texts, for example, they created an operational ontology (using Protégé) in the context of Operation Agata 7, which is concerned with border monitoring.

By means of an ordinance, they have created a task force to devise a shared and consensual vocabulary, the ABIN ontology, as well as requirements for technological solutions for link analysis. They used the Ontology 101 method and continued to use Protégé, evolving the border control ontology and broadening its scope to the whole ABIN organization. They are currently working on expanding it to the scope of SISBIN (including partner agencies).

They conducted several proofs-of-concept for this ontology, using a few interesting tools: i2 (link analysis, chronological analysis), IDSeg (textual analysis), Palantir (georeferenced analysis), IAI ELTA (?). What these tools have in common is that they need a properly constructed ontology in order to work well (which is what tool vendors don’t tell you when they sell you a tool that will supposedly solve your semantic problems).

In the end, Rafael invited Prof. Brito to talk about work done in the field of counter-intelligence that was also based on ontologies. Unfortunately, we had to catch a cab to the airport and couldn’t stay for this part of the talk.

This was my first ONTOBRAS and it was an excellent conference! Congratulations to all involved in its organization and I hope I can join you every year for more fruitful discussion!